Topic 4b: Integration

Contents

Topic 4b: Integration#

import scipy.integrate as integrate

import scipy.special as special

import numpy as np

import matplotlib.pyplot as plt

The next obvious numerical tool you will encourter in numerical integration. This is also a good time to introduce special functions, because often the hardest problem is how to integrate these kinds of special functions.

Let’s start with just doing a basic one-dimensional integral.

integrate.quad(lambda x: np.sin(x),0,2*np.pi)

(2.221501482512777e-16, 4.3998892617845996e-14)

The first number it returns is the intergal. The second is the estimated error.

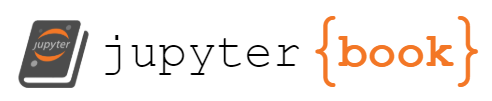

It doesn’t seem any harder to integrate some less well-known functions. Here is a Bessel J function:

xlist=np.arange(0,10,0.01)

plt.plot(xlist,special.jv(5,10*xlist))

plt.show()

Here is the integral of the function

integrate.quad(lambda x: special.jv(5,10*x),0,10)

(0.09708600454893376, 2.3560112202641e-10)

We can even integrate all the way to \(x = \infty\):

integrate.quad(lambda x: np.exp(-x**3/2),0,np.inf)

(1.1250836837509395, 3.932597111288013e-09)

However, you will encounter a lot more errors when you integrate to infinity:

integrate.quad(lambda x: special.jv(5,10*x),0,np.inf)

/var/folders/vh/rcz751b57355h3qzj37sc3080000gp/T/ipykernel_763/2798365077.py:1: IntegrationWarning: The integral is probably divergent, or slowly convergent.

integrate.quad(lambda x: special.jv(5,10*x),0,np.inf)

(-5.118808731259237, 6.0995848282412135)

Notice that there estimated error is large, so we should be careful about integrating to infinity.

Making our own integrator#

As with equation solving, we can learn a lot about the code by trying to do it ourselves. At the end of the day, one-dimensional integration is just adding up a bunch of areas.

def my_integrate(f,a,b,dx):

x=np.arange(a,b,dx)

y=f(x)

return (y.sum()-(y[0]+y[-1])/2.)*dx # we are adding the ar

my_integrate(lambda x: np.sin(x),0,2*np.pi,0.0000001)

-1.1031456935672159e-14

Okay, that seems like it is working okay. Let’s try it on a bit harder integral

import time

t1 = time.time()

print(my_integrate(lambda x: special.jv(5,10*x),0,10,0.000001))

print(time.time() - t1)

0.09708607874484464

5.23979115486145

t1 = time.time()

print(integrate.quad(lambda x: special.jv(5,10*x),0,10))

print(time.time() - t1)

(0.09708600454893376, 2.3560112202641e-10)

0.0013849735260009766

Wow! Scipy got an answer 1000 times faster. If we wanted to get an answer that fast we would lose a lot of accuracy

t1 = time.time()

print(my_integrate(lambda x: special.jv(5,10*x),0,10,0.01))

print(time.time() - t1)

0.09784348811546184

0.0010709762573242188

Accuracy setttings#

We can guess that, although scipy is much faster, it must also have some kind of accuracy settings just like ours

a=integrate.quad(lambda x: np.sin(100*x)*np.exp(-x**2),0,np.inf)

a

/var/folders/vh/rcz751b57355h3qzj37sc3080000gp/T/ipykernel_763/789010562.py:1: IntegrationWarning: The maximum number of subdivisions (50) has been achieved.

If increasing the limit yields no improvement it is advised to analyze

the integrand in order to determine the difficulties. If the position of a

local difficulty can be determined (singularity, discontinuity) one will

probably gain from splitting up the interval and calling the integrator

on the subranges. Perhaps a special-purpose integrator should be used.

a=integrate.quad(lambda x: np.sin(100*x)*np.exp(-x**2),0,np.inf)

(0.010002000716284383, 5.772468964390598e-07)

The exact answer is 0.010002001201201683031…

exact = 0.01000200120120168303067014934894552744967

a[0]-exact

-4.849173007581697e-10

The first thing we can do is to allow a finer spacing on the points used in the itegral by increasing “limit” (default=50)

b=integrate.quad(lambda x: np.sin(100*x)*np.exp(-x**2),0,np.inf,limit=10000)

b

(0.010002000775794492, 1.4393504000271286e-08)

b[0]-exact

-4.254071921117042e-10

This helps a bit, but not by much. The next thing we can do is try to force it to tolarate less error.

c=integrate.quad(lambda x: np.sin(100*x)*np.exp(-x**2),0,np.inf,limit=1000,epsabs=1.49e-14, epsrel=1.49e-14)

c

(0.010002001201201975, 1.4115117890339927e-14)

c[0]-exact

2.914335439641036e-16

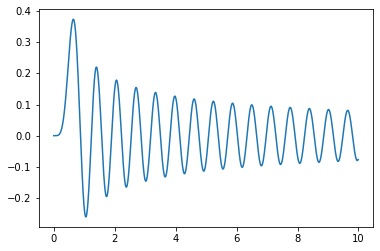

Unforunately, there are still plenty of functions that are very challenging in integrate numerically. This is particularly a problem if we have to integrate a highly oscilatory function over a lot of cycles:

fig,ax=plt.subplots(figsize=(12,6),ncols=2)

x_in=np.linspace(0,10,10000)

ax[0].plot(x_in,np.sin(100*x_in)*np.exp(-0.01*x_in))

ax[1].plot(x_in,np.sin(100*x_in)*np.exp(-0.01*x_in))

ax[1].set_xlim(0,1)

(0.0, 1.0)

We know how to integrate this function analytically so that \(\int_0^\infty dx \sin(100 x) e^{-0.01 x}\approx 100/(100^2+0.01^2) \approx 0.01\).

Let’s try integrating this numerically as before with higher accuracy settings:

integrate.quad(lambda x: np.sin(100*x)*np.exp(-0.01*x),0,np.inf,limit=100000,epsabs=1.49e-14, epsrel=1.49e-14)

/var/folders/vh/rcz751b57355h3qzj37sc3080000gp/T/ipykernel_763/3525455102.py:1: IntegrationWarning: The occurrence of roundoff error is detected, which prevents

the requested tolerance from being achieved. The error may be

underestimated.

integrate.quad(lambda x: np.sin(100*x)*np.exp(-0.01*x),0,np.inf,limit=100000,epsabs=1.49e-14, epsrel=1.49e-14)

(0.007655040627823725, 0.6746958696328023)

The answer itself is is off by 24%, but the estimated error is very large (if we didn’t know the exact answer, it would be hard to use the result).

One strategy we can try is splitting the integral into two regions, one finite one where we expect most of the area to lie:

integrate.quad(lambda x: np.sin(100*x)*np.exp(-0.01*x),0,10000,limit=100000,epsabs=1.49e-14, epsrel=1.49e-14)

/var/folders/vh/rcz751b57355h3qzj37sc3080000gp/T/ipykernel_763/1510946524.py:1: IntegrationWarning: The occurrence of roundoff error is detected, which prevents

the requested tolerance from being achieved. The error may be

underestimated.

integrate.quad(lambda x: np.sin(100*x)*np.exp(-0.01*x),0,10000,limit=100000,epsabs=1.49e-14, epsrel=1.49e-14)

(0.009999999899992926, 7.216245054811513e-13)

… and one that is small

integrate.quad(lambda x: np.sin(100*x)*np.exp(-0.01*x),10000,np.inf,limit=100000,epsabs=1.49e-14, epsrel=1.49e-14)

(-3.669156602286988e-44, 1.6875830724635754e-42)

For our purposes this error is totally fine (the integral is very small, which is all we need). However, we can sometimes get better performance by rescaling the intergation variable:

integrate.quad(lambda x: np.sin(1000000*x)*np.exp(-100*x)/10000.,1,np.inf,limit=100000,epsabs=1.49e-14, epsrel=1.49e-14)

(-2.0027823862785398e-50, 3.6175873463225482e-50)

This trick is only helpful when integrating to infinity. On any finite domain, we are usually doing a Riemann sum that doesn’t care about the lables of your variable. When integrating to infinity, the computer is doing a bit more under the hood and so sometimes you have to trick it into doing the problem in an easier way.

Multi-dimensional Integration#

Multi-dimension integration is where we see the real need to use the lambda notation. Suppose we want to perform an integral: $\(\int_0^1 dx \int_0^x dy \sin(x y)\)$ If we thought of this as two seperate integral, the boundaries of integration in x become part of the function we integrate in y.

integrate.dblquad(lambda y,x: np.sin(y*x),0,1,lambda x:0,lambda x:x)

(0.11990587100028235, 5.074216840967778e-15)

Okay - so we can do this kind of integral no problem. But what exactly is going on? Let’s start by separating off the function we want to integrate:

def f2d(y,x):

return np.sin(x*y)

integrate.dblquad(f2d,0,1,lambda x:0,lambda x:x)

(0.11990587100028235, 5.074216840967778e-15)

Okay, so everything else has to be the limits of integration. Let’s also give those names so that we have $\(\int_a^b dx \int_{g(x)}^{h(x)} dy \sin(x y)\)$

a=0

b=1

def g(x):

return 0.

def h(x):

return x

integrate.dblquad(f2d,a,b,g,h)

(0.11990587100028235, 5.074216840967778e-15)

This way of doing it makes it more clear what we are doing. In particular, y is the variable of integration that runs to enpoints that are functions of x. Therefore, we have to feed it a function, even if we only want that function to return 1. For example, to implement $\(\int_0^1 dx \int_{0}^{1} dy \sin(x y)\)$ we still need to include either functions or lambda operators because they still could have been interesting functions of x

integrate.dblquad(lambda y,x: np.sin(y*x),0,1,lambda x:0,lambda x:1)

(0.2398117420005647, 5.094460170817101e-15)

We could have also tried to do this as two different one dimensional integrals. As one goes to higher dimensional integration, the integrals will often be too slow to calculate using some generic tool like quad and it can be more useful to precompute some of the integrals first.

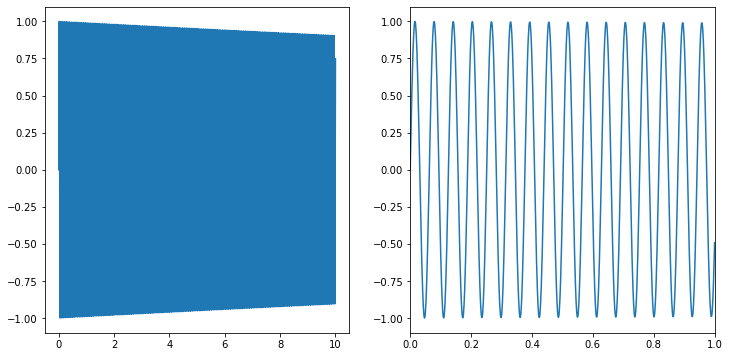

Let’s try this on this example by calculating the first integral at a few points:

xlist=np.arange(-0.01,1.01,0.01)

int_x=np.zeros(len(xlist))

for i in range(len(xlist)):

int_x[i]=integrate.quad(lambda y: np.sin(y*xlist[i]),0,xlist[i])[0]

plt.plot(xlist,int_x)

plt.show()

from scipy import interpolate

int_func= interpolate.interp1d(xlist,int_x,kind='cubic')

integrate.quad(int_func,0,1)

(0.11990587109034648, 6.399693853478578e-12)

dlbquad_sol=integrate.dblquad(f2d,0,1,lambda x:0,lambda x:x)[0]

quad_int_sol=integrate.quad(int_func,0,1)[0]

dlbquad_sol-quad_int_sol

-9.006412282630549e-11

We see that we get a surprisingly accurate answer. This is a somewhat helpful property of integration: when we calculate the area, small random errors tend to average out. In many circumstances, numerical integration is a highly effective way to find your answers.

Summary#

Numerical integration is a powerful tool that is fairly easy to use and understand. When integrating over a few variables, on a finite domain, it is often pretty straightforward to get accurate answers. We saw it is a bit trickier on infinite domains. We will see in a later lecture that it also becomes difficult when we increase the number of variables we are integrating over (dimensionality).